In cloud computing, caching lets you efficiently reuse previously retrieved or computed data. A cache is a high-speed data storage layer that stores a subset of data, typically transient in nature, so that future requests for that data are served faster than they would be from the data’s primary storage location.

Have you ever heard the phrase “clear your cache” and wondered about this voodoo magic? In most situations, people are referring to your browser cache: clearing it lets you see the latest data or content on a website or application. When it comes to web performance, WordPress caching plugins are one of those things that every site owner or webmaster has to deal with at one point or another. Of course, we all love WordPress, but it’s definitely not the fastest platform, especially if you compare it to a completely static site.

What is Caching?

Simply put, caching means that when a website is requested repeatedly by different clients, the previously generated data (or the results of requests such as database queries) is reused to speed up new requests. We also cannot forget about the process of purging the cache.

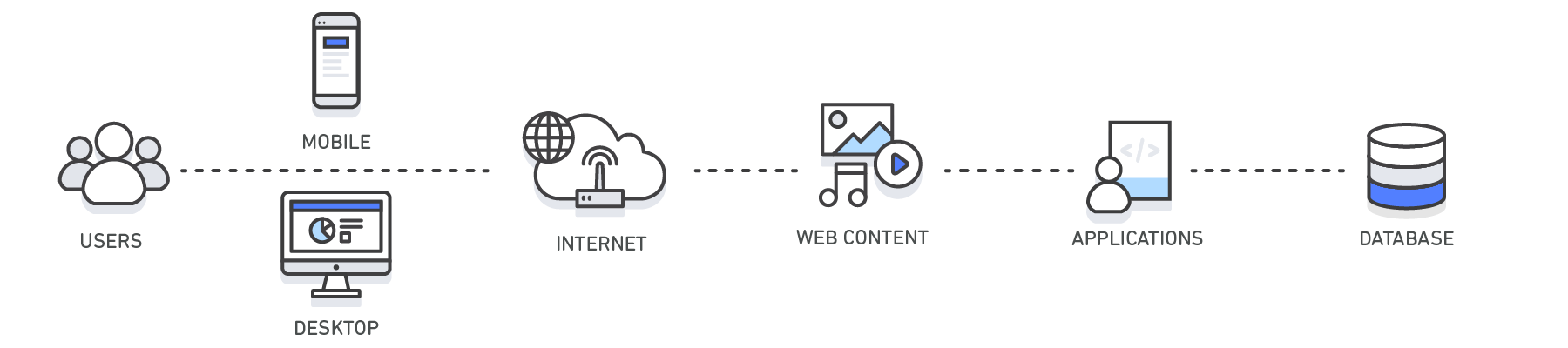

In short, when a user visits a page of your website, their browser loads all of the page’s elements, so a number of requests are sent to the server, and each of them increases the loading time of the page. This is the principle of a dynamic website: every time the page is displayed for a new visitor, all elements are requested from the web server through queries.

This is useful if your content changes at each visit, but that is not the case for articles or pages. Their content is modified only when you update it in the editor; the rest of the time, it is always the same article. It is therefore wasteful to keep serving it dynamically.

To give you an example of a query, the very content of your article (text, addresses of images, categories) will be loaded from your database. It will take several requests to do that. All of this is transparent to you and the visitor, but this has an impact on the server and the loading time of the page.

The more your database contains (articles, comments, other elements, etc.), the longer queries take to run. You can also limit the revisions of WordPress articles to keep your database a little leaner.

The data in a cache is generally stored in fast-access hardware such as RAM (random-access memory) and may also be used in conjunction with a software component. A cache’s primary purpose is to increase data retrieval performance by reducing the need to access the underlying slower storage layer.

Trading off capacity for speed, a cache typically stores a subset of data transiently, in contrast to databases, whose data is usually complete and durable.

Why Is Caching Important?

Due to the high request rates or IOPS (Input/Output Operations Per Second) supported by RAM and In-Memory engines, caching results in improved data retrieval performance and reduces cost at scale. To support the same scale with traditional databases and disk-based hardware, additional resources would be required.

These additional resources drive up the cost and still fail to achieve the low-latency performance provided by an In-Memory cache. Caches can be applied and leveraged throughout various layers of technology, including operating systems, networking layers such as Content Delivery Networks (CDNs) and DNS, web applications, and databases.

You can use caching to significantly reduce latency and improve IOPS for many read-heavy application workloads, such as Q&A portals, gaming, media sharing, and social networking. Cached information can include the results of database queries, computationally intensive calculations, API requests/responses, and web artifacts such as HTML, JavaScript, and image files.

Compute-intensive workloads that manipulate data sets, such as recommendation engines and high-performance computing simulations, also benefit from an In-Memory data layer acting as a cache. In these applications, very large data sets must be accessed in real time across clusters of machines that can span hundreds of nodes.

Due to the speed of the underlying hardware, manipulating this data in a disk-based store is a significant bottleneck for these applications. Other benefits include:

1. Improved Application Performance

Because memory is orders of magnitude faster than disk (magnetic or SSD), reading data from the in-memory cache is extremely fast (sub-millisecond).

This significantly faster data access improves the overall performance of the application.

2. Reduced Database Cost

A single cache instance can provide hundreds of thousands of IOPS (input/output operations per second), potentially replacing a number of database instances and thus driving the total cost down.

This is especially significant if the primary database charges per throughput. In those cases, the price savings could be dozens of percentage points.

3. Reduced Load on the Backend

By redirecting significant parts of the read load from the backend database to the in-memory layer, caching can reduce the load on your database.

It also protects it from slower performance under load, or even from crashing at times of spikes.

4. Predictable Performance

A common challenge in modern applications is dealing with times of spikes in application usage. Examples include social apps during the Super Bowl or election day, eCommerce websites during Black Friday, etc.

Increased load on the database results in higher latencies to get data, making the overall application performance unpredictable. A high throughput in-memory cache can solve this.

5. Elimination of Database Hotspots

In many applications, it is likely that a small subset of data, such as a celebrity profile or popular product, will be accessed more frequently than the rest. This can result in hot spots in your database and may therefore require overprovisioning of database resources based on the throughput requirements for the most frequently used data.

Storing common keys in an in-memory cache mitigates the need to overprovision while providing fast and predictable performance for the most commonly accessed data.
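As a rough illustration of keeping only the most commonly accessed keys in memory, here is a minimal sketch of a small cache with least-recently-used (LRU) eviction. LRU is just one common eviction policy chosen for this example, not something the text above prescribes, and the `LRUCache` class and its method names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` items, evicting the least recently used one."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used key

    def __contains__(self, key):
        return key in self._items


hot_keys = LRUCache(capacity=2)
hot_keys.put("celebrity-profile", {"followers": 1_000_000})
hot_keys.put("rare-profile", {"followers": 3})
hot_keys.get("celebrity-profile")            # the hot key is touched again
hot_keys.put("popular-product", {"stock": 12})  # evicts "rare-profile"
```

Because the hot key keeps being read, it stays in memory while rarely used keys are the ones pushed out.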

What Are the Best Use Cases for Caching?

When implementing a cache layer, it’s important to understand the validity of the data being cached. A successful cache results in a high hit rate, which means the data was present in the cache most of the times it was fetched. A cache miss occurs when the data fetched was not present in the cache.
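To make hits, misses, and data validity concrete, here is a minimal sketch of an in-memory cache whose entries expire after a time to live (TTL) and which tracks its own hit rate. The `TTLCache` class, its method names, and the injectable `clock` parameter are assumptions made for illustration; they do not correspond to any particular caching product.

```python
import time


class TTLCache:
    """A tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock   # injectable so expiry can be exercised without sleeping
        self._store = {}     # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None:
            value, expires_at = entry
            if self.clock() < expires_at:
                self.hits += 1
                return value
            del self._store[key]  # expired: drop it and count a miss
        self.misses += 1
        return None

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Usage with a fake clock so the example runs instantly.
fake_now = [0.0]
cache = TTLCache(ttl_seconds=10, clock=lambda: fake_now[0])
cache.set("homepage", "<html>...</html>")
fresh = cache.get("homepage")   # hit: still within the TTL
fake_now[0] = 11.0
stale = cache.get("homepage")   # miss: the entry has expired
```

Note that this sketch returns `None` on a miss, so it cannot distinguish a missing key from a cached `None` value; a real cache would usually handle that case explicitly.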

Controls such as TTLs (Time to live) can be applied to expire the data accordingly. Another consideration may be whether or not the cache environment needs to be Highly Available, which can be satisfied by In-Memory engines such as Redis. In some cases, an In-Memory layer can be used as a standalone data storage layer in contrast to caching data from a primary location.

In this scenario, it’s important to define an appropriate RTO (Recovery Time Objective, the time it takes to recover from an outage) and RPO (Recovery Point Objective, the last point or transaction captured in the recovery) for the data resident in the In-Memory engine in order to determine whether or not this is suitable.

As the illustration above suggests, the design strategies and characteristics of different In-Memory engines can be applied to meet most RTO and RPO requirements. Let’s now look at some of the use cases below:

1. Database Caching

The performance that your database provides, both in speed and throughput, can be the most impactful factor in your application’s overall performance. And although many databases today offer relatively good performance, for a lot of use cases your applications may require more.

Database Caching allows you to dramatically increase throughput and lower the data retrieval latency associated with backend databases. And as a result, it improves the overall performance of your applications. The cache acts as an adjacent data access layer to your database that your applications can utilize in order to improve performance.

A database cache layer can be applied in front of any type of database, including relational and NoSQL databases. Common techniques used to load data into your cache include lazy loading and write-through methods.
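The two loading techniques can be sketched as follows. This is a minimal illustration under stated assumptions: a plain dictionary stands in for the backend database, and the `CachedStore` class and its `read`/`write` methods are hypothetical names, not a specific library’s API.

```python
class CachedStore:
    """Puts a cache in front of a slower backing store (a dict stands in for the database)."""

    def __init__(self, database):
        self.database = database
        self.cache = {}
        self.db_reads = 0  # counts how often the slow store is actually queried

    def read(self, key):
        # Lazy loading: the cache is populated only when a key is first requested.
        if key in self.cache:
            return self.cache[key]
        self.db_reads += 1
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value

    def write(self, key, value):
        # Write-through: the database and the cache are updated together,
        # so later reads of this key never see stale data.
        self.database[key] = value
        self.cache[key] = value


database = {"user:1": "Ada"}
store = CachedStore(database)
store.read("user:1")            # miss: loaded from the database, then cached
store.read("user:1")            # hit: served from the cache
store.write("user:2", "Grace")  # written to both layers at once
store.read("user:2")            # hit: no database read needed
```

Lazy loading keeps the cache limited to data that is actually requested, while write-through trades a slightly slower write for a cache that is never behind the database.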

2. Content Delivery Network (CDN)

When your web traffic is geo-dispersed, it’s not always feasible and certainly not cost-effective to replicate your entire infrastructure across the globe. A CDN provides you the ability to utilize its global network of edge locations to deliver a cached copy of web content, such as videos, webpages, and images, to your customers.

To reduce response time, the CDN serves each request from the edge location nearest to the customer or originating request location. Throughput is dramatically increased given that the web assets are delivered from the cache. For dynamic data, many CDNs can be configured to retrieve data from the origin servers.

For instance, Amazon CloudFront is a global CDN service that accelerates the delivery of your websites, APIs, video content, or other web assets. It integrates with other Amazon Web Services products to give developers and businesses an easy way to accelerate content to end-users with no minimum usage commitments.

3. Domain Name System (DNS) Caching

Every domain request made on the internet essentially queries DNS cache servers in order to resolve the IP address associated with the domain name. DNS caching can occur on many levels including on the OS, via ISPs, and DNS servers.

For instance, Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service. In addition, caching also supports session management. HTTP sessions contain the user data exchanged between your site users and your web applications, such as login information, shopping cart lists, previously viewed items, and so on.

Managing your HTTP sessions effectively, by remembering your users’ preferences and providing rich user context, is critical to providing great user experiences on your website. With modern application architectures, utilizing a centralized session management datastore is the ideal solution.

4. Application Programming Interfaces (APIs)

Today, most web applications are built upon APIs. An API generally is a RESTful web service that can be accessed over HTTP and exposes resources that allow the user to interact with the application. When designing an API, it’s important to consider the expected load on the API, the authorization to it, the effects of version changes on the API consumers, and most importantly the API’s ease of use, among other considerations.

It’s not always the case that an API needs to instantiate business logic and/or make backend requests to a database on every request.

Sometimes serving a cached result of the API will deliver the most optimal and cost-effective response. This is especially true when you are able to cache the API response for a period that matches the rate of change of the underlying data. Say, for example, you exposed a product listing API to your users, and your product categories only change once per day.

Given that the response to a product category request will be identical throughout the day every time a call to your API is made, it would be sufficient to cache your API response for the day. By caching your API response, you’ll also relieve pressure on your infrastructure, including your application servers and databases.
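The product category example could be sketched like this. It is only an illustration: the `cached_for` decorator, the `list_product_categories` handler, and the hard-coded category list are hypothetical stand-ins for a real API handler and its database query.

```python
import time

ONE_DAY_SECONDS = 24 * 60 * 60


def cached_for(ttl_seconds, clock=time.monotonic):
    """Decorator that reuses a function's result per argument tuple until it expires."""

    def decorator(func):
        results = {}  # args -> (value, expires_at)

        def wrapper(*args):
            entry = results.get(args)
            if entry is not None and clock() < entry[1]:
                return entry[0]  # still valid: skip the backend entirely
            value = func(*args)
            results[args] = (value, clock() + ttl_seconds)
            return value

        return wrapper

    return decorator


backend_calls = []  # records each time the "backend" is really queried


@cached_for(ONE_DAY_SECONDS)
def list_product_categories():
    """Hypothetical API handler; the list stands in for a database query."""
    backend_calls.append("query")
    return ["books", "games", "music"]


first_response = list_product_categories()   # runs the query
second_response = list_product_categories()  # served from the cache
```

The TTL here is set to one day only because the categories in the example change once per day; the right value depends on how often the underlying data changes.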

You’ll also gain faster response times and deliver a more performant API. For instance, Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale.

5. Caching for Hybrid Environments

In a hybrid cloud environment, you may have applications that live in the cloud and require frequent access to an on-premises database. There are many network topologies that can be employed to create connectivity between your cloud and on-premises environments, including VPN and Direct Connect.

And while latency from the VPC to your on-premises data center may be low, it may still be optimal to cache your on-premises data in your cloud environment in order to speed up overall data retrieval performance. Other use cases for hybrid environments include:

5.1. Web Caching

When delivering web content to your viewers, much of the latency involved in retrieving web assets such as images, HTML documents, and video can be greatly reduced by caching those artifacts, which eliminates disk reads and server load.

Server-side web caching typically involves utilizing a web proxy that retains web responses from the web servers it sits in front of, effectively reducing their load and latency. Client-side web caching can include browser-based caching, which retains a cached version of previously visited web content.

5.2. General Cache

Accessing data from memory is orders of magnitude faster than accessing data from a disk or SSD, so leveraging data in a cache has a lot of advantages. For many use cases that do not require transactional data support or disk-based durability, using an in-memory key-value store as a standalone database is a great way to build highly performant applications.

In addition to speed, the application benefits from high throughput at a cost-effective price point. Referenceable data such as product groupings, category listings, profile information, and so on are great use cases for a general cache.

5.3. Integrated Cache

An integrated cache is an in-memory layer that automatically caches frequently accessed data from the origin database. Most commonly, the underlying database will utilize the cache to serve the response to the inbound database request, provided the data is resident in the cache.

This dramatically increases the performance of the database by lowering the request latency and reducing CPU and memory utilization on the database engine. An important characteristic of an integrated cache is that the data cached is consistent with the data stored on disk by the database engine.

How Amazon ElastiCache Works

Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store or cache in the cloud. The service greatly improves the performance of web applications by allowing you to retrieve information from fast, managed, in-memory data stores instead of relying entirely on slower disk-based databases.

In a distributed computing environment, a dedicated caching layer enables systems and applications to run independently from the cache, with their own lifecycles and without the risk of affecting the cache. The cache serves as a central layer that can be accessed from disparate systems, with its own lifecycle and architectural topology.

This is especially relevant in a system where application nodes can be dynamically scaled in and out. If the cache is resident on the same node as the application or systems utilizing it, scaling may affect the integrity of the cache. In addition, when local caches are used, they only benefit the local application consuming the data.

In a distributed caching environment, the data can span multiple cache servers and be stored in a central location for the benefit of all the consumers of that data. Learn how you can implement an effective caching strategy with this technical whitepaper on in-memory caching.

How WordPress Cache Plugins Work

When you install a cache plugin, it will turn your pages into static pages. This means that the first visitor to your page will still load all the elements of the dynamic page.

The difference is that the elements loaded by this first visit will be assembled into an HTML page and then saved as a copy on the server as a static file (in a cache folder). The second visitor (and every subsequent one) will no longer access the dynamic page but the one that has been saved in the cache folder.

This file will contain the elements that do not need to be reloaded each time the page is displayed. To return to the previous example, the text of your article will already be contained in the cached HTML file, so no query will be used to retrieve it from the database. That example covers only the text.

But many other elements will be directly contained in the HTML file without needing to be generated again. This will dramatically decrease the loading time of your pages. The user experience will be improved, and you may get better positioning in the search engines.

A WordPress cache plugin can make a large difference to your website. It creates static HTML copies of the pages on your website, which takes away the cost of retrieving data from your database or executing PHP code to display your page every time a visitor comes to your website.
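The general idea behind this kind of page cache can be sketched in a few lines. This is not how any particular WordPress plugin is implemented (those are written in PHP and do considerably more); it is a minimal illustration in Python, and the `PageCache` class, the `render_article` function, and the temporary cache folder are all hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


class PageCache:
    """Saves rendered pages as static HTML files and serves them on later visits."""

    def __init__(self, cache_dir, render):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.render = render  # callable: URL path -> HTML string (the dynamic page)

    def _file_for(self, url_path):
        # Hash the URL so that any path maps to a safe file name.
        name = hashlib.sha256(url_path.encode("utf-8")).hexdigest() + ".html"
        return self.cache_dir / name

    def get(self, url_path):
        cached = self._file_for(url_path)
        if cached.exists():
            return cached.read_text(encoding="utf-8")  # later visitors: static copy
        html = self.render(url_path)                    # first visitor: build the page
        cached.write_text(html, encoding="utf-8")
        return html

    def purge(self, url_path):
        # Called when the article is edited, so the next visit regenerates it.
        self._file_for(url_path).unlink(missing_ok=True)


renders = []  # records each time the page is really generated


def render_article(url_path):
    """Stand-in for the database queries and template code of a dynamic page."""
    renders.append(url_path)
    return f"<html><body>Article at {url_path}</body></html>"


page_cache = PageCache(tempfile.mkdtemp(), render_article)
first_visit = page_cache.get("/hello-world")   # generated and saved
second_visit = page_cache.get("/hello-world")  # read from the cache folder
```

The `purge` method reflects the point made earlier: the cached copy must be discarded whenever the content changes, otherwise visitors keep seeing the old version.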

By now, everyone knows how important a fast website is. The quickest and most effective way to speed up your site’s web pages is to use caching tools such as WordPress cache plugins. With that in mind, here are some WordPress plugins (both free and premium) you can consider for your site caching.

Top 10 Best WordPress Cache Plugins:

- Breeze: Free WordPress Cache Plugin

- WP Rocket: Most Popular Premium Cache Plugin

- WP-Optimize: All-in-one WordPress Optimization Plugin

- SG Optimizer: Free WordPress Plugin by SiteGround

- WP Super Cache: Cache Plugin from WordPress.com

- W3 Total Cache: Developer Friendly WordPress Cache Plugin

- WP Fastest Cache: WordPress Cache Plugin With Minimal Configuration

- Comet Cache: Free WordPress Caching Plugin

- Cache Enabler: Best Lightweight WordPress Cache Plugin

- Hyper Cache: Yet Another Free WordPress Cache

Of course, there are other capable plugins that didn’t make our list.

If you have additional suggestions, let us know in our comments section so that we can include them on the list. Wouldn’t it be nice if you didn’t have to worry about figuring out which WordPress caching plugins are the best? The list above is a starting point, but test the features each plugin offers to make an informed decision.

Takeaway

Today, the Internet is all about speed! To rank well on search engines, one of the attributes a website needs is to be fast. Nobody wants to use a website that is slow; it drives away potential customers.

Some users will simply click the back button before even viewing it. In reality, a fast-loading site is a ‘make or break’ deal, especially if you build sites for clients or run an online business. But keeping your website running at blazing-fast speeds requires a superheroic effort.

That’s why we employ superheroes like Hummingbird and WPSmush to keep your site and your images optimized. It’s also why we publish loads of content on ways to improve WordPress performance and boost your site’s speed.

That said, before even choosing among the best WordPress cache plugins, one of the first things to know is the current state of your website, which you can check with a free service like Pingdom Tools.

One of the improvements Pingdom Tools is likely to recommend is precisely to install a WordPress cache plugin. The service also gives you information on the loading time of your pages and the improvements you can make.

Finally, let us know what you think about the caching guide above. You can share your additional thoughts and questions in our comments section below, or Contact Us if you need more support or help.
