Welcome to the new marketplace for Meta AI Llama 3 models. Meta, the parent company of Facebook, Instagram, and WhatsApp, is doubling down on its commitment to open generative AI with its most recent Llama 3 models. Meta describes the family as open, “frontier-level” models that businesses can customize to their own needs.

OpenAI may be the better-known name in commercial generative AI, but Meta has carved out a place by open-sourcing powerful large language models. Llama 3, its largest generative AI model at the time of release, outperforms the models behind OpenAI’s ChatGPT on some standard AI benchmark tests.

Meta AI, built with Llama 3 technology, is now one of the world’s leading AI assistants. It is designed to help you learn, get things done, create content, and connect with others. In the coming months, Meta intends to introduce new capabilities, longer context windows, additional model sizes, and enhanced performance.

In this article, you’ll learn how Llama 3 works, where you can use it, and how Meta is trying to develop it responsibly while offering resources that help others use it responsibly as well. These include new trust and safety tools: Llama Guard 2, Code Shield, and CyberSec Eval 2. With that in mind, let’s dive right in.

Exploring How The Innovative Llama 3 Is Empowering Meta AI

Llama 3 is a large language model (LLM) created by Meta. It can power generative AI applications, including chatbots that respond in natural language to a wide variety of queries. The use cases Llama 3 has been evaluated on include brainstorming ideas, creative writing, coding, summarizing documents, and responding to questions in the voice of a specific persona or character.

Technically, the Llama 3 models are the latest entry in Meta’s series of LLMs, developed with a focus on natural language understanding, generation, and interaction. Building on the successes and lessons of its predecessors, Llama 3 introduces several key improvements that make it more powerful, efficient, and versatile than earlier versions.

The first two models of this generation, released as Meta Llama 3, are available for broad use. The release features pre-trained and instruction-fine-tuned language models with 8B and 70B parameters that can support a broad range of use cases. According to Meta, this generation of Llama demonstrates state-of-the-art performance on a wide range of industry benchmarks.

It offers new capabilities, including improved reasoning. Meta describes these as the best open source models of their class. In keeping with its longstanding open approach, Meta is putting Llama 3 in the hands of the community. It wants to kickstart the next wave of innovation in AI across the stack, from applications to developer tools to evals to inference optimizations and more.

The four Llama 3 variants:

- 8 billion parameters pre-trained.

- 8 billion parameters instruction fine-tuned.

- 70 billion parameters pre-trained.

- 70 billion parameters instruction fine-tuned.
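To make the two sizes more concrete, here is a rough sketch of how much memory the weights alone would occupy. The parameter counts come from the list above; the storage formats (fp16, int8, int4) and their bytes-per-parameter values are assumptions for illustration, and the estimate ignores activations and the key/value cache. The pre-trained and instruction-fine-tuned variants of each size have the same footprint.

```python
# Rough, weight-only memory estimate for the two Llama 3 sizes.
# Parameter counts come from the variant list; the precisions are assumed
# storage formats, not an official list of supported formats.
LLAMA3_PARAMS = {"8B": 8e9, "70B": 70e9}
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(size: str, precision: str = "fp16") -> float:
    """Return the approximate gigabytes needed just to hold the weights."""
    return LLAMA3_PARAMS[size] * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for size in LLAMA3_PARAMS:
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(size, precision)
            print(f"Llama 3 {size} @ {precision}: ~{gb:.0f} GB of weights")
```

Real deployments need headroom on top of these figures, so treat them as a lower bound when sizing hardware.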

Llama 3 powers generative AI features that can be used in a browser or through a wide range of Meta products. The consumer-facing Meta AI assistant built on Llama 3 is rolling out across Facebook, Instagram, WhatsApp, and Messenger. The model itself can be downloaded from Meta or accessed through major enterprise cloud platforms.

The Meta AI Llama 3 Release Updates And Its Empowering Platforms

Large language models (LLMs) have changed the way we interact with technology, enabling major advances in natural language processing (NLP), machine learning, and AI-driven applications. Among the notable LLM families is Llama (originally short for Large Language Model Meta AI), which has become a cornerstone of the open-model marketplace.

It marks a new stage in conversational AI and related technologies. With the release of Llama 3, the latest iteration, the field takes another step forward, promising greater capabilities and performance. With Llama 3, Meta set out to build open models that are on par with the best proprietary models available today while addressing developers’ feedback.

The aim is to increase the overall helpfulness of Llama 3 while continuing to play a leading role in the responsible use and deployment of LLMs. In addition, the Meta AI developers are embracing the open source ethos of releasing early and often, so the community can get access to these models while they are still in development.

The text-based models are the first in the Llama 3 collection. Llama 3 was released on April 18, 2024, on Google Cloud Vertex AI, IBM’s watsonx.ai, and other large LLM hosting platforms. AWS followed, adding Llama 3 to Amazon Bedrock on April 23. As of April 29, Llama 3 was also available on these major platforms:

- Databricks.

- Hugging Face.

- Kaggle.

- Microsoft Azure.

- NVIDIA NIM.

Hardware platforms from AMD, AWS, Dell, Intel, NVIDIA, and Qualcomm also support Llama 3. Ultimately, Meta’s goal is to make Llama 3 multilingual and multimodal, give it a longer context window, and continue to improve overall performance across core LLM capabilities such as reasoning and coding.

Understanding What Makes Llama 3 Stand Out Versus The Competition

Llama 3, developed by Meta, is part of a new wave of open-source language models designed to push the boundaries of what AI can achieve. There are two main configurations: a compact 8-billion parameter version and a more powerful 70-billion parameter version. Both models deliver impressive language understanding and reasoning capabilities, making them ideal for a range of uses.

Applications of Llama 3 models span from natural language processing (NLP) tasks to more complex reasoning scenarios. What’s unique about Llama 3 is its flexibility and openness. Unlike many proprietary models, its weights are openly available, allowing developers to dive into the architecture, fine-tune it, and customize it to meet specific needs. This openness encourages innovation.

It also democratizes access to state-of-the-art AI, which is a huge step forward for the tech community. When evaluating Llama 3, the first question is: How does it stack up against big names like GPT-4 Turbo? The answer is nuanced. Llama 3’s 70-billion parameter model comes very close to GPT-4 Turbo in terms of language quality, yet it offers significantly faster response times.

This makes it well suited to real-time applications or scenarios where latency is a critical factor. One major advantage of Llama 3 is its pricing. For instance, GPT-4 Turbo costs around $15 per 1 million tokens processed, while hosted Llama 3 can handle the same volume for around 20 cents, depending on the provider. That’s a huge cost difference; you can run more experiments or serve more users without blowing through your budget.
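To see what that price gap means for a real workload, the arithmetic can be sketched as follows. The two per-million-token prices are the approximate figures quoted above, not live pricing, and the monthly token volume is a hypothetical workload chosen for illustration.

```python
# Cost comparison using the approximate prices quoted in the text.
# These are illustrative figures; actual prices vary by provider and over time.
GPT4_TURBO_USD_PER_MILLION = 15.00
LLAMA3_USD_PER_MILLION = 0.20


def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Return the cost in dollars of processing `tokens` tokens."""
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # hypothetical workload
    gpt4 = cost_usd(monthly_tokens, GPT4_TURBO_USD_PER_MILLION)
    llama = cost_usd(monthly_tokens, LLAMA3_USD_PER_MILLION)
    print(f"GPT-4 Turbo: ${gpt4:,.2f} per month")
    print(f"Llama 3:     ${llama:,.2f} per month")
    print(f"Ratio:       {gpt4 / llama:.0f}x")
```

At these quoted prices the ratio is the same for any volume, so the saving scales linearly with usage.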

Open-source, state-of-the-art performance

Llama 3 is open source, as Meta’s other LLMs have been. Creating open-source models has been a valuable differentiator for Meta. In a July 2024 newsroom post, Meta founder and CEO Mark Zuckerberg explained his take on this philosophy. On July 23, 2024, the Meta AI team announced Llama 3.1 405B, the most advanced version of Llama 3 at that point.

There have also been major improvements to Llama 3.1 70B and 8B. Llama 3.1 405B has 405 billion parameters, making it competitive with GPT-4o, Claude 3.5 Sonnet, and the like. As long as it is used under the license terms, Llama 3 is free. You can download the models directly or use them within the various cloud hosting services listed above, although those services may charge fees.

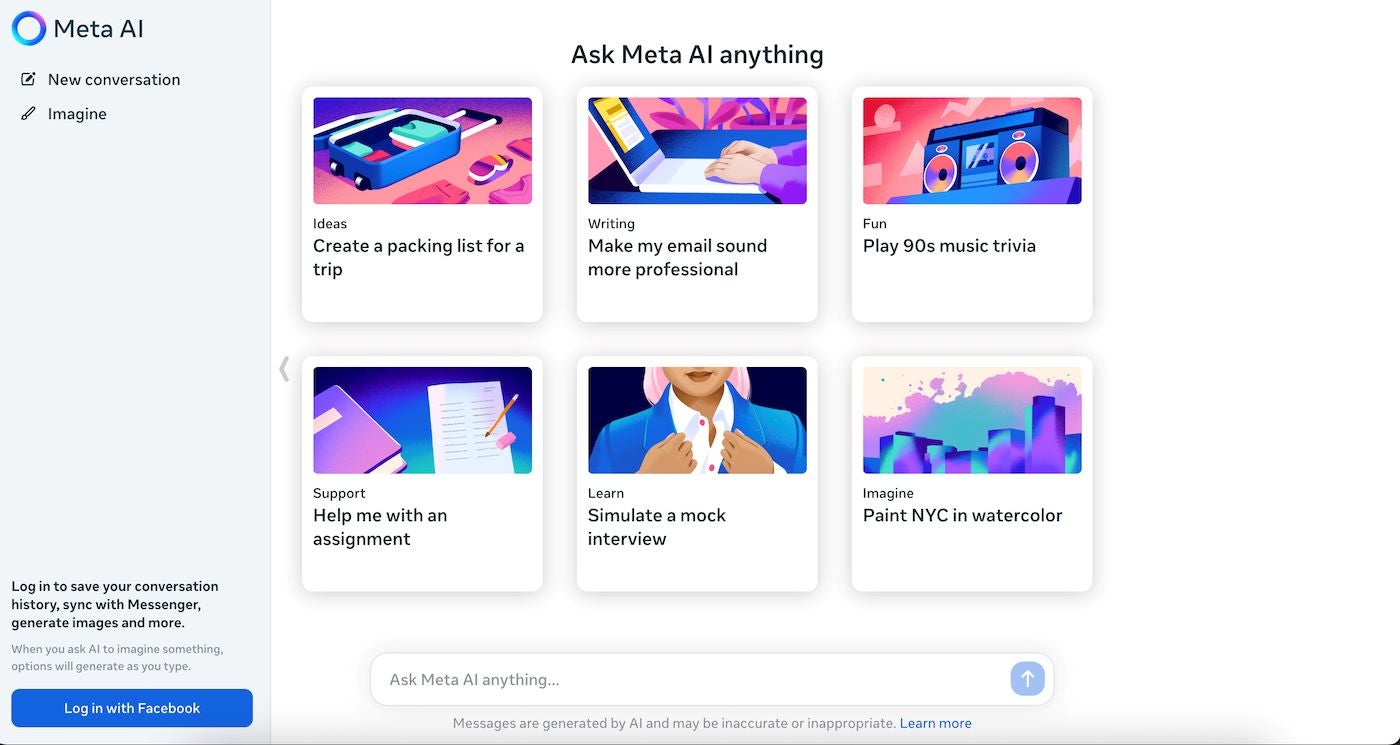

The Meta AI start page in a browser offers options for what to ask Llama 3 to do. However, it’s worth noting that Llama 3 is not multimodal: it works with text only and cannot understand data from other modalities, such as images, video, or audio. The Meta AI team plans to make Llama 3 multimodal.

However, there is some debate over how much of a large language model’s code or weights need to be publicly available to count as open source. Llama 3’s license agreement states that applications with more than 700 million monthly active users need to request a separate license. But as far as business purposes go, Meta offers a more open look at Llama 3 than its competitors do for their LLMs.

Shaping the competitive generative AI landscape

To make Llama 3 more capable than Llama 2, Meta added a new tokenizer to encode language much more efficiently. Meta souped Llama 3 up with grouped query attention, a method of improving the efficiency of model inference. The Llama 3 training set is seven times the size of the training set used for Llama 2, Meta said, including four times as much code. Meta applied new efficiencies to Llama 3’s pretraining and instruction fine-tuning.
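Grouped query attention is easier to picture with numbers. In this scheme, several query heads share one key/value head, which shrinks the key/value cache that must be kept in memory during inference. The sketch below shows only the head-sharing arithmetic, not a full attention implementation. The layer count, head counts, head dimension, and context length are assumptions taken from the publicly released 8B configuration, so check the model card before relying on them.

```python
# Head-sharing arithmetic behind grouped query attention (GQA).
# The 8B-style numbers below are assumptions for illustration.
N_LAYERS = 32
N_QUERY_HEADS = 32
N_KV_HEADS = 8
HEAD_DIM = 128
CONTEXT_LEN = 8192


def kv_head_for_query_head(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head that a query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence (fp16 by default)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


if __name__ == "__main__":
    for q in (0, 3, 4, 31):
        kv = kv_head_for_query_head(q, N_QUERY_HEADS, N_KV_HEADS)
        print(f"query head {q} -> KV head {kv}")
    grouped = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, CONTEXT_LEN)
    full = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, CONTEXT_LEN)
    print(f"KV cache with GQA: {grouped / 2**30:.1f} GiB")
    print(f"KV cache with one KV head per query head: {full / 2**30:.1f} GiB")
```

Under these assumed numbers, sharing each key/value head across four query heads cuts the cache to a quarter of its size, which is where the inference efficiency comes from.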

Since Llama 3 is designed as an open model, Meta added guardrails with developers in mind. A new guardrail is Code Shield, which is intended to catch insecure code the model might produce. Later releases in the family (Llama 3.1, Llama 3.2, and Llama 3.3) are also free to download, and you can fine-tune, distill, and deploy them anywhere.

Llama 3 competes directly with GPT-4 and GPT-3.5, Google’s Gemini and Gemma, Mistral AI’s Mistral 7B, Perplexity AI’s models, and other LLMs that individuals or businesses use to build generative AI chatbots and other tools. About a week after Llama 3 was revealed, Snowflake debuted its own open enterprise AI with comparable capabilities, called Snowflake Arctic.

The increasing performance requirements of LLMs like Llama 3 are contributing to an arms race of AI-enabled PCs that can run models at least partially on-device. Meanwhile, generative AI companies may face increased scrutiny over heavy computing needs, which could contribute to worsening climate change.

Getting Started With The Innovative Meta AI Functionality Integration

Presuming you have already decided that generative AI is right for your business, choosing whether to use Llama 3 will probably come down to availability. Llama 3 is free to use and, within its license limits, more customizable than its competitors. It may be more effective for coding than rivals like GPT-4 or Claude 3. However, Llama 3 has other competitors in the coding space.

For example, GitHub recently debuted Copilot Workspace, which is customized for coding and can create code based on natural language prompts. Llama 3 may be good for your organization if you want a general-purpose, open-source family of AI models. It outperforms OpenAI’s GPT-4 on HumanEval, a standard benchmark that checks whether code generated by a model passes unit tests for a set of hand-written programming problems.
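For readers curious how a HumanEval-style score is derived, the sketch below implements the commonly used pass@k estimator: given n generated samples for a problem, of which c pass the unit tests, it estimates the chance that at least one of k samples would pass. The sample counts in the example are made up for illustration and are not Llama 3 results; this article’s source does not say which k its quoted scores use.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed the tests, k: samples drawn.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few failing samples to fill a draw of size k, so one must pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical problem: 10 samples generated, 3 of them passed.
    print(f"pass@1 = {pass_at_k(10, 3, 1):.2f}")
    print(f"pass@5 = {pass_at_k(10, 3, 5):.2f}")
```

A benchmark score is then the average of this value across all problems, usually reported as a percentage.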

On HumanEval, Llama 3 70B scored 81.7 compared to GPT-4’s score of 67. However, GPT-4 outperformed Llama 3 on the knowledge assessment MMLU, scoring 86.4 to Llama 3 70B’s 79.5. Meta’s blog post covers Llama 3’s performance on more tests. Separately, if your system has the necessary hardware capabilities, you can set up and run a Llama chatbot on your local machine.

To do this:

- Visit the Meta AI website to download the required weights (files containing the model’s parameters)

- Head to the Llama Chatbot Repository on GitHub, where you’ll find step-by-step instructions to install and set up the chatbot

By following the above steps, you can run the chatbot on your local machine, experiment with it, and explore its features. In addition, given the other free methods outlined in the next section, anyone can now tap into the power of this language model. From exploring user-friendly platforms to leveraging open-source implementations, there are plenty of options.
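Once the weights are running locally, the instruction-tuned models expect each chat turn to be wrapped in special tokens. The sketch below builds such a prompt string by hand. The token names follow the published Llama 3 instruct prompt format as best understood here; most chat tooling applies this template for you, so treat the helper (whose name is hypothetical) as an illustration of what happens under the hood and verify the format against the official model card.

```python
# Hand-built prompt in the Llama 3 instruct chat format.
# `format_llama3_prompt` is an illustrative helper, not part of any library.
def format_llama3_prompt(messages: list[dict[str, str]]) -> str:
    """Turn a list of {"role", "content"} messages into a single prompt string."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # Leave an open assistant header so the model writes the next turn.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = format_llama3_prompt([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize what Llama 3 is in one sentence."},
    ])
    print(prompt)
```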

1. Meta AI Web

While helping developers integrate Llama 3 models, Meta is pushing the boundaries of AI through research, infrastructure, and product innovation. Its stated mission is to build things that connect people in inspiring ways. Because no company can advance groundbreaking AI alone, Meta shares its research and engages with the AI community to advance the science together.

Whether in AI infrastructure, generative AI, NLP, computer vision, or other core areas of AI, Meta’s focus is on collaborative, responsible AI innovation. Accessing the Meta AI platform directly is the most straightforward way to experience Llama 3.

Meta AI is available in a wide range of countries across the globe, including Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe. This cutting-edge artificial intelligence platform is accessible in English through Meta services, such as the Meta AI Website, Facebook, Instagram, WhatsApp, Messenger, etc.

2. AnakinAI

AnakinAI offers the Meta Llama 3 70B and 8B models on its AI tools platform, and they can be used for free. The AnakinAI platform hosts hundreds of other AI apps, and you can also build apps yourself. You can use the 70B and 8B models in English and Japanese through four apps: LLAMA 3 8B English Ver, LLAMA 3 8B Japanese Ver, LLAMA 3 70B English Ver, and LLAMA 3 70B Japanese Ver.

3. Perplexity AI Labs

Perplexity AI Labs, a part of Perplexity AI, provides a user-friendly platform for developers to explore and experiment with large language models, including Llama 3. With a simple and intuitive interface, you can easily select either the llama-3-70b-instruct or llama-3-8b-instruct model and start interacting right away. One of its notable advantages is its generous rate limits.

These limits let you test Llama 3’s capabilities extensively. For the llama-3-70b-instruct model, you can make up to 20 requests per 5 seconds, 60 requests per minute, and 600 requests per hour, with a token rate limit of 40,000 tokens per minute and 160,000 tokens per 10 minutes. The llama-3-8b-instruct model has similar request limits.

Its token rate limit is 16,000 tokens per 10 seconds, 160,000 tokens per minute, and 512,000 tokens per 10 minutes. Perplexity AI itself is an AI-powered search engine, and Perplexity Labs is worth exploring for its capabilities and user-friendly interface.
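If you script against a service with layered request limits like these, a small client-side limiter helps you stay under all of them at once. The sketch below is one possible approach, a sliding-window check over several windows. The class name is hypothetical, the limit values are the 70B request limits quoted above, and it does not track the token-based limits.

```python
import time
from collections import deque


class MultiWindowLimiter:
    """Allow a request only if every (max_requests, window_seconds) limit has room."""

    def __init__(self, limits, clock=time.monotonic):
        self.limits = list(limits)
        self.clock = clock
        self.sent = deque()  # timestamps of accepted requests, oldest first
        self.longest_window = max(window for _, window in self.limits)

    def try_acquire(self) -> bool:
        now = self.clock()
        # Forget requests that are older than the longest window.
        while self.sent and now - self.sent[0] >= self.longest_window:
            self.sent.popleft()
        for max_requests, window in self.limits:
            recent = sum(1 for stamp in self.sent if now - stamp < window)
            if recent >= max_requests:
                return False
        self.sent.append(now)
        return True


# Request limits quoted in the text for llama-3-70b-instruct.
LLAMA3_70B_REQUEST_LIMITS = [(20, 5), (60, 60), (600, 3600)]

if __name__ == "__main__":
    limiter = MultiWindowLimiter(LLAMA3_70B_REQUEST_LIMITS)
    accepted = sum(limiter.try_acquire() for _ in range(25))
    print(f"accepted {accepted} of 25 immediate requests")
```

When `try_acquire` returns `False`, the caller can sleep briefly and retry instead of sending a request that the server would reject.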

4. HuggingChat

HuggingChat, an open-source interface developed by Hugging Face, allows users to engage with large language models, including Llama 3. Simply log in or create a free account, select the “Meta-Llama-3-70B-Instruct” model, and start conversing. The intuitive interface makes it easy to interact with Llama 3, although the free token allowance is not specified. It’s ideal for everyday use.

HuggingChat aims to make the community’s best AI chat models available to everyone. Llama 3 is listed there as a fast and extremely capable model that matches closed-source models’ capabilities. The goal of the app is to showcase that it is now possible to build an open-source alternative to ChatGPT. To keep offering a diverse set of state-of-the-art open LLMs, the team rotates the available models over time.

5. Replicate Playground

Replicate’s pitch is that AI can do extraordinary things but is still too hard to use. The company argues that AI is not inherently hard; the right tools and abstractions just don’t exist yet. So the Replicate team is building tools that let any software engineer use AI as if it were normal software.

In Replicate’s view, you should be able to import an image generator the same way you import an npm package, and customize a model just as easily. Replicate Playground allows experimenting with Llama 3 models without creating an account. Access the models through meta-llama-3-70b and meta-llama-3-8b. The simple interface makes it easy to start testing and comparing Llama 3 models.

6. Vercel AI Chatbot

Vercel offers another way to try Meta AI Llama 3 models. Vercel’s Frontend Cloud provides the developer experience and infrastructure to build, scale, and secure a faster, more personalized web, with the goal of making building and deploying as easy as a single tap.

Vercel Chat offers free testing of Llama 3 models, excluding “llama-3-70b-instruct”. You can compare response quality and token usage by chatting with two or more models side by side. This feature provides valuable insights into the strengths, weaknesses, and cost efficiency of different models.

7. Groq Tool Models

Another easy way to work with Llama 3 is through Groq, a platform that simplifies interacting with these models. It’s like the OpenAI playground but for open-source models. You create an API key, select the Llama 3 model you want to use, and start running queries. We’ve tested it out, and the setup is straightforward. You’re ready to go after installing the Groq client with a simple pip install groq.
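The steps above can be sketched with the Groq Python client. This is a minimal example, assuming the API key is stored in a GROQ_API_KEY environment variable and that the model ID shown is still offered; model IDs change over time, so check the current list in your Groq console. The helper names are hypothetical.

```python
import os


def build_messages(question: str,
                   system: str = "You are a concise assistant.") -> list[dict[str, str]]:
    """Build the chat message list sent to the model."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def ask_llama3(question: str, model: str = "llama3-8b-8192") -> str:
    """Send one question to a Llama 3 model on Groq (model ID is an assumption)."""
    # Imported lazily so build_messages works even without the package installed.
    from groq import Groq

    client = Groq(api_key=os.environ["GROQ_API_KEY"])
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question),
    )
    return response.choices[0].message.content


if __name__ == "__main__":
    if "GROQ_API_KEY" in os.environ:
        print(ask_llama3("What sizes does Llama 3 come in?"))
    else:
        print("Set GROQ_API_KEY to run this example.")
```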

While the Llama-3 Groq Tool Use models excel at function calling and tool use tasks, we recommend implementing a hybrid approach that combines these specialized models with general-purpose language models. This strategy allows you to leverage the strengths of both model types and optimize performance across a wide range of tasks.
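One simple way to picture this hybrid approach is a router that sends requests carrying tool definitions to the specialized model and everything else to a general-purpose one. The sketch below is only an illustration: the routing rule is deliberately naive, the function name is hypothetical, and both model IDs are assumptions that may not match what your provider currently offers.

```python
from typing import Optional

# Both model IDs are assumptions for illustration; verify them before use.
TOOL_USE_MODEL = "llama3-groq-70b-8192-tool-use-preview"
GENERAL_MODEL = "llama3-70b-8192"


def choose_model(prompt: str, tools: Optional[list] = None) -> str:
    """Pick the tool-use model only when the request actually defines tools."""
    # The prompt is accepted so a smarter rule could inspect it later.
    return TOOL_USE_MODEL if tools else GENERAL_MODEL


if __name__ == "__main__":
    weather_tool = {"name": "get_weather", "parameters": {"city": "string"}}
    print(choose_model("What is the weather in Nairobi?", tools=[weather_tool]))
    print(choose_model("Write a haiku about open models."))
```

A production router would likely look at more than the presence of tools, but the split shown here captures the basic idea.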

Overall, the Llama-3 Groq Tool Use models represent a significant step forward in open-source AI for tool use. With state-of-the-art performance and a permissive license, these models should enable developers and researchers to push the boundaries of AI applications in various domains.

What The Future Holds: Why It Matters For Developers And CTOs

Meta AI’s continuous advancements signal a robust commitment to AI development. The release of Llama 3 not only steps up competition in the AI landscape but also enhances the accessibility and affordability of powerful AI tools. With its openness and the strategic release of these models, Meta continues to exert pressure on other industry players, thereby democratizing AI systems.

The excitement doesn’t stop at the first Llama 3 models. When they launched, Meta said its largest models, at more than 400 billion parameters, were still in training, and work on successor models continues. The aim is to improve not just performance but also multimodal capabilities, allowing the models to handle text, images, and potentially other data types together. This could open up new possibilities in fields like computer vision and robotics.

Llama 3’s combination of high performance, low cost, and open-source nature makes it a compelling choice for companies looking to integrate AI without being locked into expensive licensing agreements. It’s also a great option for research teams and developers who want to experiment, fine-tune, or extend AI capabilities without hitting a wall on proprietary restrictions.

In the architecture space, where efficiency and scalability are crucial, Llama 3 allows us to build robust applications that leverage state-of-the-art AI, all while keeping infrastructure costs manageable. And because it’s open source, we have full transparency and control over how we use it.

In Conclusion

As the software architects behind the Web Tech Experts Agency, we’ve spent a lot of time evaluating different language models, and we’re excited to share why Llama 3 is a game-changer for those of us working on cutting-edge AI projects. If you’re an engineer or team lead looking to adopt AI, we highly recommend giving Meta AI Llama 3 a try.

As the Meta AI Llama 3 models keep rolling out, it’s clear that this family will be a cornerstone for developers looking to harness cutting-edge AI capabilities. Whether you’re experimenting with AI-powered applications or integrating sophisticated AI features into existing platforms, Llama 3 offers the tools and performance you need to succeed.

Likewise, whether you’re building chatbots, doing complex data analysis, or developing new NLP features, Meta AI Llama 3 is a solid, versatile, and budget-friendly contender. And as Meta continues to invest in this space, we’re excited to see how it’ll further reshape the AI landscape. So, go ahead and give it a spin. Let’s see what we can build together with this powerful tool!
