A variety of speech-to-text apps, devices, and tools now help deaf or hard-of-hearing employees with a key accessibility issue: inclusion for people along the disability spectrum. Bear in mind that many corporate accessibility options amount to: ‘We could put you in touch with an American Sign Language [ASL] interpreter.’ That’s not a solution.

Suffice it to say, not everybody who has hearing loss knows ASL, by a long shot. Now with so many people staying home due to ongoing COVID-19-related restrictions, and with the introduction of face masks that hinder lip reading, deaf and hard-of-hearing people are turning to technology to assist in daily activities.

Gartner predicts speech-to-text and automated natural language generation will keep growing over the next 10 years. Bern Elliot, an analyst at Gartner, also sees a rising trend of open source, as tech giants like Microsoft, through its AI lab, and Google keep their models open in order to attract new talent, researchers, and students.

For the 11 million US residents who are hard of hearing or deaf, speech-to-text advancements have improved their ability to complete daily tasks, but the tech still has a long way to go. For them, the development of AI speech recognition tech is not just hype. It’s a sign of hope for tools that may eventually help them get through the day.

The Road To Speech-To-Text Communication Channels

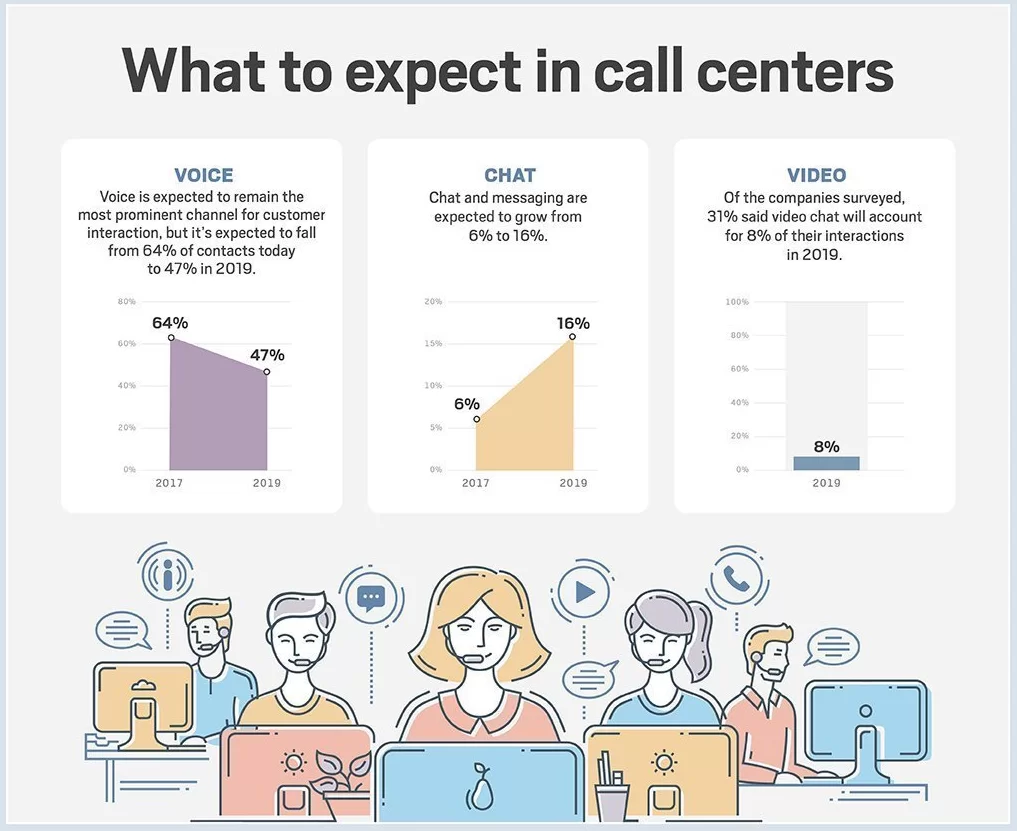

Automated Speech Recognition technologies can range from simple transcription services in call centers to dating app matching algorithms, but a burgeoning consumer use case focuses on software and apps aimed at people who are deaf and hard of hearing.

Bern Elliot, research vice president and distinguished analyst of artificial intelligence and customer service at Gartner, said the first generation of rule-based speech technology was made of separate algorithms that transformed WAV file data into sound, then mapped those sounds to words.

That rule-based speech technology produced a transcription that statistical grammar models learned to recognize as speech. Now the term “speech to text” reigns supreme. Speech-to-text technology excels at captioning in real time.

Most current STT algorithms use neural networks and machine learning models to create transcripts. For example, Microsoft’s STT uses two machine learning models that work together: the first takes spoken language and converts it to text, and the second takes that written language and rewrites it the way people would like to read it, Elliot said.

Elliot said everyone who has been to an airport, or bar, or used closed captioning on the news is familiar with speech recognition technology, usually at the sentence level.

Automated speech recognition platforms frequently automate at the sentence level in order to allow a machine learning algorithm to incorporate context. When translating or transcribing at the word level, an algorithm goes word by word to create an output, which can sometimes result in a broken sentence or mismatched meaning.

At the sentence level, algorithms begin building transcriptions word by word, then readjust to form a complete sentence, often changing previous words after considering the semantic and contextual meaning of the full thought. Of all the featured STT vendors in Gartner’s market report, all but one use real-time technology at the sentence level, word level, or both.

“When you’re doing it at the word level, there are some words that sound the same, and until you have the sentence context, it may be difficult to know for sure. So [the algorithm] does a best guess,” Elliot said. “It knows when it gets further in, ‘I should have said this.’ So it changes [the word] in sentence level.”
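To make the word-level versus sentence-level distinction concrete, here is a minimal Python sketch. It is a toy, not how any vendor's system works: the `CANDIDATES` table and the `BIGRAM_SCORES` values are invented for illustration, and real STT engines rely on trained acoustic and language models rather than lookup tables.

```python
# Toy illustration only. Both tables below are invented for this example.

# Words that sound alike; the first entry is the default "best guess".
CANDIDATES = {
    "there": ("there", "their"),
    "to": ("to", "two", "too"),
}

# Hypothetical scores for how well a candidate fits the word that follows it.
BIGRAM_SCORES = {
    ("their", "car"): 3,
    ("two", "doors"): 3,
    ("there", "is"): 3,
}


def word_level(heard):
    """Commit to the default guess for each word as soon as it is heard."""
    return [CANDIDATES.get(word, (word,))[0] for word in heard]


def sentence_level(heard):
    """Start from word-level guesses, then revise each word using the next one."""
    words = word_level(heard)
    for i in range(len(words) - 1):
        options = CANDIDATES.get(heard[i], (heard[i],))
        following = words[i + 1]
        # Keep the default unless another candidate fits the context better.
        words[i] = max(options, key=lambda c: BIGRAM_SCORES.get((c, following), 0))
    return words


heard = "there car has to doors".split()
print(" ".join(word_level(heard)))
print(" ".join(sentence_level(heard)))
```

With these invented tables, the word-level pass keeps "there car has to doors", while the sentence-level pass revises it to "their car has two doors" once the following words are known.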

How Speech-To-Text Apps Usually Work

Speech-to-text recognizes spoken words and converts them into text through a computer or app. It is also referred to as computer speech recognition or simply speech recognition. Its most common uses are dictating notes on your phone, media subtitling, clinical documentation, call analytics, and media content search.

Speech-to-text apps are handy for everyday use and stand apart from transcription software, which is often more time-consuming to use. The primary difference between the two is that speech-to-text apps take live speech and transform it into readable content. For example, a speech-to-text app can create a text copy of what you dictate to your phone.

In contrast, transcription software creates a text copy of an audio file, and it almost always requires you to upload a pre-recorded file first, adding one more step to the process. With that in mind, there are some criteria for choosing the best speech-to-text apps for Android and iPhones that are worth knowing first.

Consider the following key factors:

- Accuracy: A guaranteed accuracy level, or customers reporting strong accuracy with the speech-to-text app, was a hugely important factor when choosing apps for our list. Accuracy ensures the app will save you time in the long run because you won’t have to go through and extensively edit the transcript.

- User-friendliness: User-friendliness is crucial to the functionality of any app. If we found the app unwieldy, difficult to use, or not organized intuitively, we didn’t include the app on our list.

- Pricing: We looked for apps that are reasonably priced and included the prices throughout our list so that you are aware of all fees going into using the app.

- High customer ratings: We checked that the following speech-to-text apps for Android and iPhones had high customer ratings and satisfaction online.

- Multi-language support: We looked for apps with multiple-language support to fit many language needs.

- App compatibility: Speech-to-text apps that are compatible with other apps or integrate within other apps were prioritized on our list. This is an essential feature if you want to use the app among multiple platforms and apps.

- No additional software required: We prioritized apps that work on their own and skipped any that required multiple downloads or additional software to operate fully.

- Security features: We looked into the app’s security and privacy measures to protect your private information.
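One common way to put a number on the accuracy criterion above is word error rate (WER): the count of word substitutions, deletions, and insertions needed to turn the app's transcript into a trusted reference transcript, divided by the number of words in the reference. The sketch below is a minimal Python illustration, assuming simple whitespace tokenization and lowercasing; the function name and sample sentences are made up for the example, and it is not the scoring method used for the list in this article.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from transcript
                curr[j - 1] + 1,     # extra word in transcript
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


spoken = "please refill my prescription today"
transcribed = "please refill my subscription today"
print(word_error_rate(spoken, transcribed))
```

Here one of the five reference words is wrong, so the rate works out to 0.2. A lower rate means less editing afterward.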

As you can see, those are among the basic features you’ll need from the best, highest-quality speech-to-text apps. Luckily, together with our partner, we went ahead and spent hours researching the best speech-to-text apps available for their usability, accuracy, pricing, and much more. So, here are the 12 best speech-to-text apps for Android and iPhones.

Using Interactive Widgets Plus Natural Language Interfaces

A natural language interface lets users interact with the app using human language. For example, a modern search engine lets users enter simple, plain-language queries like “What’s the capital of Kenya?” Most search engines don’t require queries to include Boolean operators or special configuration data; they interpret input by processing natural language directly.

Because a natural language interface accepts input in whichever way users choose to enter it, this approach, when implemented well, delivers the simplest, most consistent, and most efficient user experience. Natural language design also makes it easy to tailor a single interface to different users’ needs, because individuals engage with the app using whichever words make sense to them.

When paired with tools that can translate speech to text, a natural language approach enables users to input information using speech rather than a keyboard. For mobile applications, this is a critical advantage, because users can interact with your app on the go.

The major challenge in natural language design is that interpreting natural language queries requires sophisticated algorithms. And, when implemented poorly, it can deliver results that don’t match the request. Because of this limitation, this design makes the most sense when an app focuses on a specific domain or offers a limited range of functionality.

In that case, it’s easier to define the scope of possible queries that a user might enter and write algorithms that can interpret them effectively. For example, consider a simple digital thermostat app that lets users set the temperature by talking into their phones. This would be a good candidate for natural language design, simply because there aren’t many words that the interface would need to interpret to determine user intent.

A banking app that offers a range of functionality, from checking balances to transferring money to paying bills, might be more difficult to run through a natural language interface.
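The thermostat case is narrow enough to sketch in a few lines of Python. This is only an illustration of how small the vocabulary can be, not a production parser: the function name, the list of accepted verbs, and the 50 to 90 degree range are all assumptions made up for the example, and the input is assumed to be text that a speech-to-text step has already produced.

```python
import re

# Hypothetical limits for this example; a real app would use its own range.
MIN_TEMP = 50
MAX_TEMP = 90


def parse_thermostat_command(utterance):
    """Return the requested temperature as an int, or None if unclear."""
    text = utterance.lower()

    # Only a handful of verbs signal the single intent this app supports.
    if not re.search(r"\b(set|make|change|turn)\b", text):
        return None

    # Look for a standalone two-digit number such as "72".
    match = re.search(r"\b(\d{2})\b", text)
    if match is None:
        return None

    target = int(match.group(1))
    if not MIN_TEMP <= target <= MAX_TEMP:
        return None
    return target


print(parse_thermostat_command("Set the temperature to 72 degrees"))
print(parse_thermostat_command("Transfer money to savings"))
```

The first call yields 72 and the second yields None, because the request falls outside the one intent the parser knows. A banking app would need many such intents, plus account names, amounts, and dates, which is why the scope grows quickly.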

Automated Speech Recognition (ASR) Plus Its Daily Functioning

STT technologies and Automated Speech Recognition (ASR) vary widely by use case. Consumers frequently engage with transcription services, some of which provide quick results while others take a few minutes to transcribe the text. For accessibility uses, the speed at which STT performs transcription must be nearly immediate.

Michael Conley, a deaf San Diego museum worker who uses STT technologies like Innocaption, a California-based mobile captioning app, said that real-time captioning allows him to complete activities like filling prescriptions, holding interviews, and having long phone calls.

“I’ve talked to people and they don’t know until afterward that the call has gone through artificial intelligence or a live stenographer,” Conley said. “There’s never been a situation where I needed to reveal that I was using an AI-based ASR.”

After he recently lost a job that had provided access to desktop-based tools, getting a mobile app became a high priority for Conley.

His experience highlights the disparity between desktop tools, which are common, and mobile apps, which are rare. Many STT technologies are limited to certain devices, apps, or processing systems. Elliot said speech-to-text technology for deaf and hard-of-hearing people needs to be multimodal, meaning it can be used across devices seamlessly.

He predicted that this shift will happen within the next five years.

Some Notable Speech-To-Text App Limitations To Consider

One of the larger issues with creating and implementing STT technologies is training algorithms. Elliot said that oftentimes, people don’t speak the way they would write, and vice versa. There are colloquial terms, inferences, inflections of voice, and other nuances that change a word’s meaning.

Training models on written data for speech output, or speech data for written output, doesn’t always work. The intricacies of human language all need to be represented in the data sets used to train the machine learning algorithm.

“I’ve had vendors tell me that it used to be a battle of the algorithms. But a lot of algorithms are now open source. The difference is increasingly who has better data for training,” Elliot said.

Elliot added that STT can be hard to get right from a developer’s point of view, which is why the apps preferred by customers are constantly changing. “[STT models] take a lot of capabilities, a lot of technical ability, and a lot of data. You have to have a lot of skill to make these work,” Elliot said. “However, it’s within reach of a knowledgeable data science and machine learning [developer], because a lot of the algorithms are public now.”

Another limitation is that developers need to take a slightly different mindset when building speech-to-text tools for deaf people.

Useful Tips For Using Speech-To-Text Apps

Is voice-to-text software secure? Yes, voice-to-text software is reasonably secure. As with any software, there is some risk to your security in using it. However, voice-to-text software removes the human transcription element, decreasing the risk of security leaks or information being read by other humans.

The best speech-to-text apps have many great uses, from saving you time and freeing up your hands to creating a neat transcription of your dictation. We hope this guide to speech-to-text apps inspires you to use a great speech-to-text app to improve productivity and save time during busy work or school schedules.

For instance, Notta allows you to focus on current conversations, organize your transcriptions, and generate valuable documents, such as work memos, reports, and video subtitles from your transcriptions. You should follow a few essential tips when using speech-to-text apps to keep your transcription as accurate as possible.

Below are some of them:

- Speak clearly and loudly. The app should be able to hear you clearly to maintain the highest level of accuracy for the transcription. Use a microphone, if possible, to amplify your voice and reduce background noise. In addition, we recommend speaking slowly and enunciating clearly so that the app doesn’t misunderstand your words.

- Avoid background noise as much as possible. Background noise can skew the accuracy of your transcription and lead to gaps in the text.

- Outline a draft first. If you are dictating a voice memo or email, go over your notes or outline first. This can help you avoid pauses and errors while dictating.

- Emphasize while you speak. Take pauses before sentences, and don’t be afraid to take a long pause to collect your thoughts.

- Learn voice commands. Many speech-to-text apps offer dictation commands, such as “comma,” “period,” or “open quotes,” so that you can add punctuation to your transcription easily. Many of these apps also allow you to insert emojis as you go. Using voice commands will save you time in the long run by cutting down on edits later.

- Choose the best app for your situation. Take a moment to assess the many speech-to-text apps available and choose an app that is best fitted to your needs. For example, if you plan on having multiple speakers for dictation, select an app advertised as good at identifying multiple speakers. Likewise, some apps offer many more supported languages and dialects, so if you have a strong accent or want transcription that translates to other languages, choose one of those apps.

- Proofread your transcriptions. Before sending your transcription to a colleague or fellow student, take a moment to look over the transcription for mistakes. Even the best speech-to-text apps will make occasional errors, so always take a moment to double-check for mistakes to avoid any embarrassing moments.

- Use placeholder commands. Check if your speech-to-text app offers the ability to use a placeholder word. The placeholder word will signify to your app to insert a phrase, jargon, or email signature instead of needing to state the entire phrase each time you dictate to your app.
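To show roughly what the voice-command and placeholder tips above do behind the scenes, here is a minimal Python sketch that post-processes a raw transcript. It is an assumption-laden illustration, not how any particular app works: the function name, the `insert signature` placeholder, and its expansion are invented, and a real app would need to tell a spoken command apart from the ordinary word (for example, "a period of time"), which this sketch does not attempt.

```python
import re

# Spoken commands named in the tips above, mapped to the mark they insert.
ATTACH_TO_PREVIOUS_WORD = {"comma": ",", "period": "."}
ATTACH_TO_NEXT_WORD = {"open quotes": '"'}

# Hypothetical placeholder phrase and expansion, invented for this example.
PLACEHOLDERS = {"insert signature": "Best regards, Sam"}


def apply_dictation_commands(transcript):
    """Replace spoken commands and placeholder phrases in a raw transcript."""
    text = transcript
    for phrase, mark in ATTACH_TO_PREVIOUS_WORD.items():
        # Drop the space before the command so the mark hugs the previous word.
        text = re.sub(rf"\s*\b{re.escape(phrase)}\b", mark, text, flags=re.IGNORECASE)
    for phrase, mark in ATTACH_TO_NEXT_WORD.items():
        # Drop the space after the command so the mark hugs the next word.
        text = re.sub(rf"\b{re.escape(phrase)}\b\s*", mark, text, flags=re.IGNORECASE)
    for phrase, expansion in PLACEHOLDERS.items():
        # Swap the short placeholder phrase for its longer stored text.
        text = re.sub(rf"\b{re.escape(phrase)}\b", expansion, text, flags=re.IGNORECASE)
    return text


print(apply_dictation_commands("hello comma world period"))
print(apply_dictation_commands("thanks comma insert signature"))
```

The first call turns the dictated commands into "hello, world." and the second expands the placeholder into "thanks, Best regards, Sam". Punctuation commands are applied before placeholders so that text inside an expansion is never mistaken for a command.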

Summary Notes:

Despite the fact that data scientists typically strive for the highest level of accuracy possible when developing machine learning models, Conley noted that for end users, any level of audio transcription automation is helpful. In comparison to other AI and machine learning technologies, STT for deaf users is helpful in any capacity.

This means developers should focus on producing tools that are useful, even if they’re not perfect. Using free speech-to-text software or paid speech-to-text software is entirely up to you. However, free speech-to-text software often lacks quality technical support and may not have the best accuracy or speed.

Much speech-to-text software also limits capacity, so you may be restricted to a small amount of recording time. Lastly, free speech-to-text software may require a more significant time commitment on your part because lower accuracy may require you to make additional edits that you wouldn’t otherwise need to do.
